(This blogpost was going to be just about a project I'm working on Gush,

but instead it's turned into a whole lot of backstory, and then a short

tutorial about Gush. If you're just

interested in the tutorial, skip to the bottom I guess.)

I recently wrote about possible routes for anti-abuse

systems. One of the goofier

routes I wrote about on there discussed genetic programming. I get the

sense that few people believe I could be serious... in some ways, I'm

not sure if I myself am serious. But the idea is so alluring! (And,

let's be honest, entertaining!) Imagine if you had anti-abuse programs

on your computer, and they're growing and evolving based on user

feedback (hand-waving aside exactly what that feedback is, which might

be the hardest problem), adapting to new threats somewhat invisibly from

the user benefiting from them. They have a set of friends who have

similar needs and concerns, and so their programs propagate and

reproduce with programs in their trust network (along with their

datasets, which may be taught to child programs also via a genetic

program). Compelling! Would it work? I dunno.

A different, fun use case I can't get to leave my mind is genetic

programs as enemy AI in roguelikes. After all, cellular

automata are a class

of programs frequently used to study genetic programs. And roguelikes

aren't tooooooo far away from cellular automata where you beat things

up. What if the roguelike adapted to you? Heck, maybe you could even

collect and pit your genetic program roguelike monsters against your

friends'. Roguelike Pokémon! (Except unlike in Pokémon, "evolving"

actually really means "evolving".)

Speculating on the future with Lee Spector

Anyway, how did I get on this crazy kick? On the way back from

LibrePlanet (which went quite well, and deserves its own blogpost), I

had the good fortune to be able to meet up with Lee

Spector. I had heard of Lee

because my friend Bassam Kurdali works in the same building as him in

Hampshire College, and Bassam had told me a few years ago about Lee's

work on genetic algorithms. The system Lee Spector works on is called

PushGP (Push is the

stack language, and PushGP is Push used for genetic programming). Well,

of course once I found out that Push was a lisp-based language, I was

intrigued (hosted on lisps traditionally, but not always, and Push

itself is kind of like

Lisp

meets

Forth),

and so by the time we met up I was relatively familiar with Lee's work.

Lee and I met up at the Haymarket Cafe, which is a friendly coffee shop

in Northampton. I mentioned that I had just come from LibrePlanet where

I had given a talk on The Lisp Machine and

GNU.

I was entertained that almost immediately after these words left my

mouth, Lee dove into his personal experiences with lisp machines, and

his longing for the kind of development experiences lisp machines gave

you, which he hasn't been able to find since. That's kind of an aside

from this blogpost I suppose, but it was nice that we had something

immediately to connect on, including on a topic I had recently been

exploring and talking about myself. Anyway, the conversation was pretty

wild and wide-ranging.

I had also had the good fortune to speak to Gerald

Sussman again at

LibrePlanet this year (he also showed up to my

talk

and answered some questions for the audience). One thing I observed in

talking with both Sussman and Spector is they're both very interested in

thinking about where to bring computing in terms of examining biological

systems, but there was a big difference in terms of their ideas; Sussman

is very interested in holding machines "accountable", which seems to

frequently also mean being able to examine how they came to conclusions.

(You can see more of Sussman's thinking about this in the writeup I did

of the first time I ever got to speak with

Sussman when I was at FSF's 30th anniversary

party... maybe I should try to capture some information about the most

recent chat too, before it gets lost to the sands of time...) Spector,

on the other hand, seems convinced that to make it to the next level of

computing (and maybe even the next level of humanity), we have to be

willing to give up on demanding that we can truly understand a system,

and that we have to allow processes to run wild and develop into their

own things. There's a tinge of Vernor Vinge's original vision of the

Singularity,

which is that there's a more advanced level of intelligence than humans

are able to currently comprehend, and to understand it there you have to

have crossed that boundary yourself, like the impossibility of seeing

past the event horizon of

a black hole. (Note that this is a pretty different definition of

singularity from some of the current definitions of Singularity that

have come since, which are more about possible outcomes of such a change

rather than about the concept of an intellectual/technological event

horizon itself.) That's a possible vision for the future of humanity,

but it's also a vision of what maybe the right direction is for our

programming too.

Of course, this vision that code may be generative in a way where the

source is mostly unintelligible to us feels possibly at odds to our

current understanding of software freedom, a movement which spends a lot

of time talking about inspecting source code. The implications of that

is a topic of interest of mine (I wrote about it in the FSF bulletin

not too long

ago)

and I did needle Spector about it... what does copyright mean in a world

where humans aren't writing software? Spector seems to acknowledge that

it's a concern (he agrees that examples of seed-DRM and genetic patents

in the case of Monsanto are troubling) but I got the sense that it's not

his biggest interest... Spector thinks that the future of that side of

software freedom and copyright might be that humanity realizes how

absurd software copyright and patents and other intellectual restriction

regimes are once things generate far enough. But I get the sense that

more than talking about the legal/licensing aspects of the

auto-generative future, Spector would rather be building it. Fair

enough!

Anyway, somewhere along these lines I mentioned my interest in

distributed anti-abuse systems. We talked about how more basic

approaches such as Bayesian filtering might not be good enough to combat

modern abuse beyond just spam especially, because the attack patterns

taken change so frequently. Suddenly it hit me: I wonder whether or not

genetic programs would work pretty well in a distributed system... after

all, you could use your web of trust to breed the appropriate filtering

programs with your friends' programs... would it work?

Anyway, on the ride back I began playing with some of Push's ideas, and

(with a lot of helpful feedback from Lee Spector... thank you, Lee!) I

started to put together a toy design for a language inspired by PushGP

but with some properties that I think might be more applicable to an

anti-abuse system that needs to keep around "memories" between

generations. (Whether it's better or not, I don't really know yet.)

So...

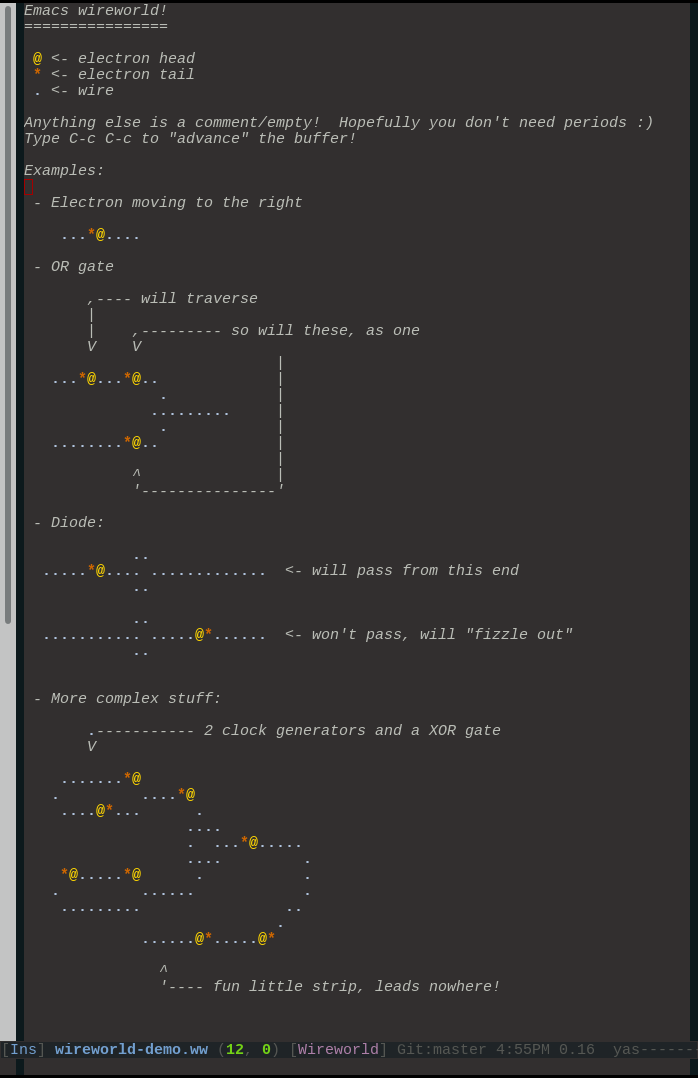

A little Gush tutorial

At this point, Gush exists. It has

the stack based language down, but none of the genetic programming.

Nonetheless, it's fun to hack around in, looks an awful lot like Push

but also is far enough along to demonstrate its differences, and if

you've never played with a stack based language before, it might be a

good place to start.

Let's do some fun things. First of all, what does a Push program look

like?

(1 2 + dup *)

Ok, that looks an awful lot like a lisp program, and yet not at the same

time! If you've installed Gush, you can run this example:

> (use-modules (gush))

> (run '(1 2 + dup *))

$1 = (9)

Whoo, our program ran! But what happened? Gush programs operate on two

primary stacks... there's an "exec" stack, which contains the program

being evaluated in progress, and a "values" stack, with all the values

currently built up by the program. Evaluation happens like so:

exec> '(1 2 + dup *) vals> '() exec> '(2 + dup *) vals> '(1) exec> '(+ dup *) vals> '(2 1) exec> '(dup *) vals> '(3) exec> '(*) vals> '(3 3) exec> '() vals> '(9)

Okay, great! What else can we run?

> (run '(3 2 / 4 +))

$2 = (14/3)

> (run '(88 'foo define foo 22 +))

$3 = (110)

> (run '(1 "two" 3 +))

$4 = (4 "two")

> (run '(98 ("balloons" "red") 1 +))

$5 = (99 "red" "balloons")

> (run '(98 "balloons" "red" 1 +))

$6 = (99 "red" "balloons")

> (run '('+ 'foo define 88 'foo var-push 2 foo)) $7 = (90)

> (run '(1 1 + 'b define 2 b = if "two b" "not two b")) $8 = ("two b")

There's more to it than that, but that should get you started.

How is Gush different from Push?

Gush takes almost all its good ideas from Push, but there are two big

differences.

Both Gush and Push try to avoid type errors. You can do all sorts of

code mutation, and whether or not things will actually do anything

useful is up for grabs, but it shouldn't crash to a halt. The way Push

does it is via different stacks for each value type. This is really

clever: it means that each operation applies to very specific types, and

if you always know your input types carefully, you can always be safe on

a type level and shouldn't have programs that unexpectedly crash (if

there aren't enough values on the appropriate type stack, Push just

no-ops).

But what if you want to run operations that might apply to more than one

type? For example, in Gush you might do:

> (run '(1 2 / 4.5 +))

$9 = (6.5)

In Push, you'd probably do something like this:

> (run '(1 2 INTEGER./ FLOAT.FROMINTEGER 4.5 FLOAT.+))

$9 = (6.5)

(And of course, if you wanted rational numbers rather than just floats,

you'd have to add another type stack to that...)

I really wanted generic methods that were able to determine what types

they were able to apply to. For one thing, imagine you have a program

that's doing a lot of complicated algebra... it should be able to

operate on a succession of numbers without having to do type coercion

and hit/miss on whether it chose the right of several typed operators,

when it could just pick one operator that can apply to several items.

I also wanted to be able to add new types without much difficulty. As it

is, I don't have to rewire anything to throw hash-maps into Gush:

> (run '(42 "meaning of life" make-hash hash-set))

$10 = (<hash-table>)

This would just work, no need to wire anything new up!

The way Gush does it is it uses generic operators which know how to

check the predicates for each type, and which "search the stack" for

values it knows it can apply. (It also no-ops if it can't find

anything.) If bells and alarms are going off, you're not wrong! In

Gush's current implementation, this does have the consequence that any

given operation might be worst case O(n) of the size of the values

stack! Owch! However, I'm not too worried. Gush checks how many

operations every program takes (and has the option to bail out if a

program is taking too many steps) and searching the stack after failing

to match initially counts against a program. I figured that if programs

are auto-generated, one fitness check can be how many steps it takes for

the program to finish its computation, and so programs would be

incentivized to keep the appropriate types near where they would be

useful. I'm happy to say that it turns out I'm not the only one to think

this; unknown to me when I started down this path, there's another Push

derivative named

Push-Forth

which has only one stack altogether (not even separate stacks for exec

and values!) and it does some similar-ish (but not quite the same)

searching (or converging on a fixed point) by currying operations until

the appropriate types are available. (Pretty cool stuff, but to be

honest I have a hard time following the Push-Forth examples I've seen.)

It comes to the same conclusion that by checking the number of steps a

program takes to execute as part of its fitness, programs will be

encouraged to keep types in good places anyway. However! There's more

reasons to not despair; I'm relatively sure that there are some clever

things that can be done with Gush's value stack so that predicate

information is cached and looking for the right type can be made O(1).

That has yet to be proven though. :)

The other feature Gush differentiates itself from Push is that Gush

variables are stacks rather than single values. This ties in nicely with

the classic Push approach that lists are unwrapped and applied to the

exec stack at the time they are to be evaluated anyway, so it makes no

behavioral change in the case that you just use "define" (which will

always clobber the state of the stack, whether or not it exists, to

replace it with a stack with a single element of the new value). But it

also allows you to build up collections of information over time... or

even collections of code. An individual variable can be appended to and

modified as the program runs, so you could write or even modify

subroutines to variables. (Code that writes code! Very lispy, but also a

bit crazier because it might happen at runtime.) Push also has this

feature, but it has one specific, restricted stack for it, named the

CODE stack appropriately. Why have one of these stacks, when you could

have an unlimited number of them?

That wasn't the original intent for having variables as values though; I

only realized that you could make each variable into a kind of CODE

stack later. My original intent was driven by a concern/need to be able

to carry information from parent process to child process. I added a

structure to Gush programs named "memories", and I figured that parent

programs could "teach" their memories to child programs. So this was

really just a hash table of symbols to stacks that persisted after the

program ended (which, since if you use run-application you get the whole

state of the program as the same immutable structure that is folded over

during execution, you have that information attached to the application

anyway). The idea of "memories" was that parent programs could have

another program that, after spawning a child program, could "teach" the

things they knew to their children (possibly either by simply copying,

or more likely through a separate genetic program applied to that same

data). That way a database of accrued information could be passed around

from generation to generation... a type of genetic programming

educational system (or folklore). So that was there, but then when I

began adding variables around the same time I realized that a variable

that contained a single value and which was pushed onto to the exec

stack was, due to the way Push "unwraps" lists, exactly the same as if

there was just that variable alone pushed onto the stack. Plus, it

seemed to open up more paths by having the cool effect of having any

variable be able to take on the power of Push's CODE stack. (Not to

mention, removing the need for a redundant CODE stack!)

Are these really improvements? I don't know, it's hard to say without

actually testing with some genetic programming examples. That part

doesn't exist yet in Gush... probably I'll follow the current lead of

the Push community and do mutation on the linearized Plush

representation of Push

code.

Anyway, I also want to give a huge thank you to Lee Spector. Lee has

been really patient in answering a lot of questions, and even in the

case that Gush does have some improvements, they're minor tweaks

compared to the years of work and experimentation that has gone into the

Push/PushGP designs.

And hey, it was a lot of fun! Not to mention, a great way to

procrastinate on the things I should be working on...

{#Protesting EME before the Microsoft building; CC BY 3.0}

{#Protesting EME before the Microsoft building; CC BY 3.0}